Recently, I have been developing a solution to extract data from a Log Analytics workspace using Azure Data Factory. At the time of writing, Azure Data Factory does not have a native connector for Log Analytics workspaces so the Log Analytics REST API must be used instead. These data will then be loaded into an Azure SQL database to be used for reporting.

Whilst it is possible to export M Queries straight out of Log Analytics into Power BI, this will not cater for long-term retention scenarios. A straightforward task I thought, initially. Wrong. In the next four posts, I will go over the steps required to setup the initial environment, extract the data, and finally process/load the data into the SQL database.

What is required to get started?

- Azure Subscription – Subscription Owner/Contributor and Azure AD permissions to deploy Service Principals

- PowerShell Core with the Az Module installed

- Please install if not already on your machine

- On Windows 10 you can get it in the Microsoft Store

- For other platforms such as Mac/Linux, you can get it here – PowerShell/PowerShell: PowerShell for every system! (github.com)

- In this post, I will be using Windows Terminal in PowerShell Core mode on Windows 10 but the regular PowerShell Core command prompt will suffice

- NB – I am not using Windows PowerShell in the interests of being OS agnostic so you can copy these steps on Mac, Linux etc

- Please install if not already on your machine

To speed things up, I have created a couple of ARM templates and reference PowerShell scripts over on my GitHub. The following PowerShell used in the following steps is all documented there. It is worth cloning this folder onto your local PC.

In later posts, we will need to deploy other resources, but these will be deployed as and when they are needed.

Deploying the initial resources

Reference PowerShell in GitHub repository for this section: ‘Deploy Initial Resources.ps1’

Deploy Initial Resources will create the following resources:

- Resource Group

- Azure KeyVault

- Log Analytics workspace

- ADLS Gen 2 Data Lake Account

- Azure Data Factory

(If you do not have an Azure Subscription – please create one before continuing)

- Open a PowerShell Core terminal in either Windows Terminal or a PowerShell Core terminal as an administrator/or with elevated permissions

- Install the Az PowerShell module. If this is already installed, then check for updates. Press A and Enter to trust the repository as it downloads and installs the latest modules. This may take a few minutes

##Run if Az PowerShell module is not already installed Install-Module Az -AllowClobber ##Run if Az PowerShell module is already installed but update check is required Update-Module Az

- Import the Az PowerShell module into the current session

Import-Module Az

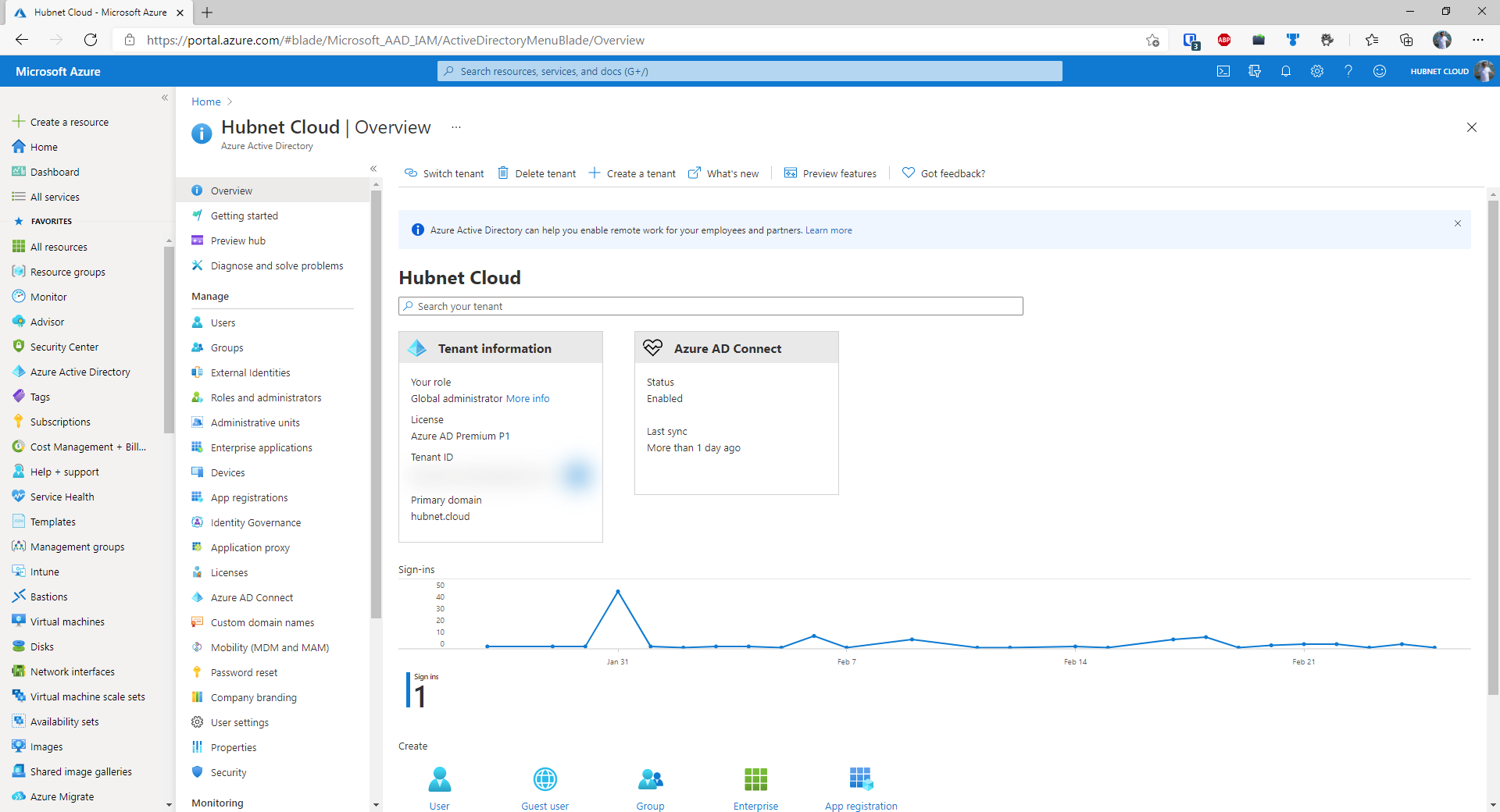

- Authenticate to Azure as shown below. If the tenant or subscription is different to the default associated with your Azure AD identity, this can be specified in the same line of code with the -Tenant or -Subscription switches. To find out the Tenant GUID, open the Azure Portal ensure that you are signed into the correct tenant and go to Azure Active Directory. The Directory GUID should be displayed on the home blade. To find the Subscription GUID, switch to the correct tenant in the Azure Portal and type in Subscriptions in the search bar and press Enter. The GUIDs for all subscriptions that you have access to in that tenant will be displayed.

Connect-AzAccount

- In the PowerShell terminal, find the short code for the Azure region nearest to you by typing the below

Get-AzLocation

- Set the variables that will be used throughout this deployment process

##Set paremeters to be used by ARM template $resourceGroupName = '<randomString>' $deploymentLocation = '<Shortcode from previous Step>' ##Must be a location property of the output from the previous command $resourceRandomName = '<randomString>' #Must be globally unique and sixteen characters or less $tenantId = (Get-AzTenant).Id $currentUser = (Get-AzContext).Account.Id $principalObjectId = (Get-AzADUser -UserPrincipalName $currentUser).Id

- Firstly, a resource group must be deployed to deploy the resources required for this scenario. The resource group will be named according to the value assigned to the $resourceGroupName variable

##Deploy the resource group New-AzSubscriptionDeployment -Location $deploymentLocation -TemplateFile 'arm-template-resource-group.json' -resourceGroupName $resourceGroupName -deploymentLocation $deploymentlocation

- Now that is deployed, deploy the resources into this group

##Deploy the resources New-AzResourceGroupDeployment -ResourceGroupName $resourceGroupName -TemplateFile 'arm-template-resources.json' -deploymentLocation $deploymentLocation -resourceRandomName $resourceRandomName -tenantId $tenantId -principalObjectId $principalObjectId

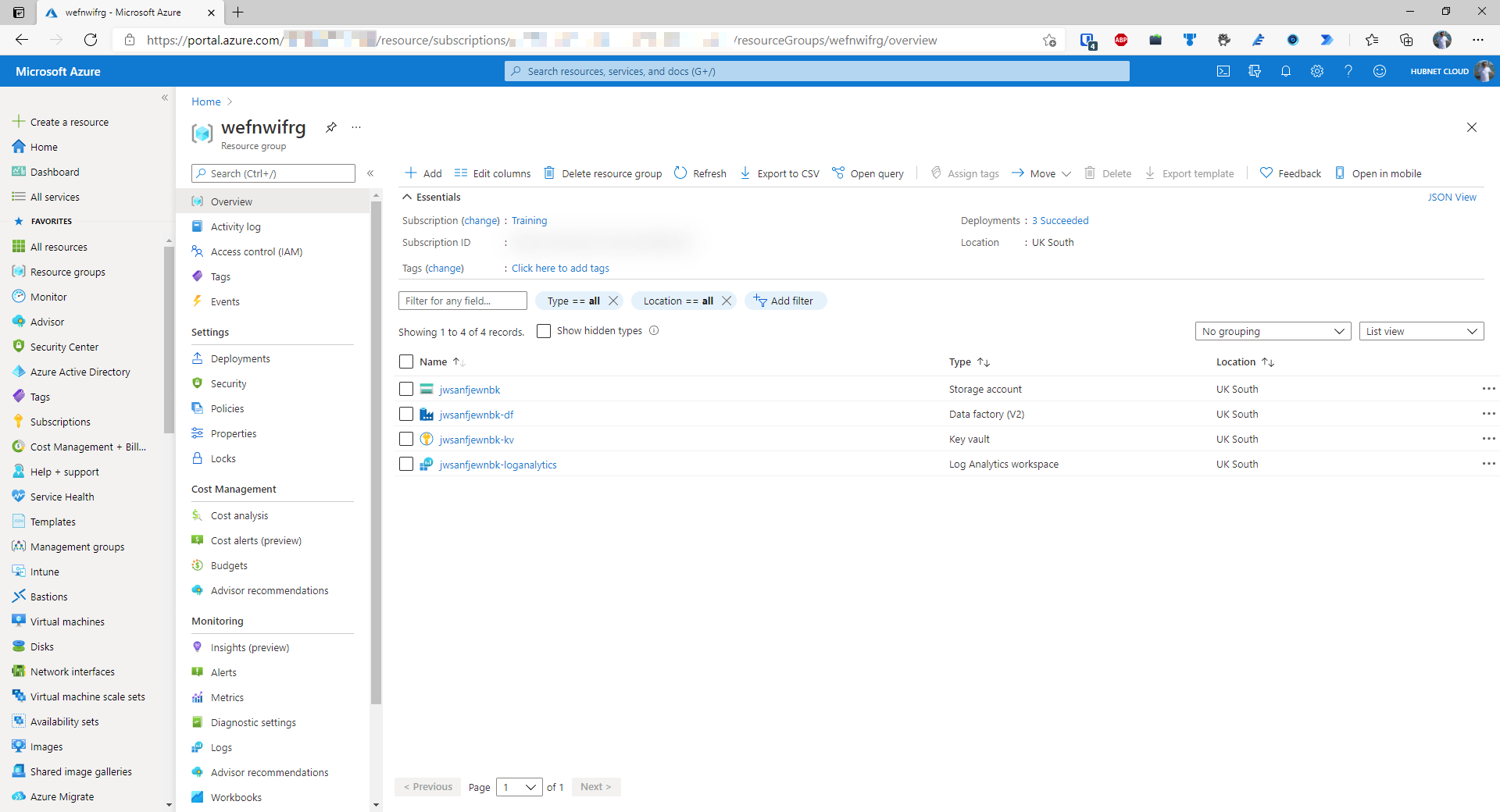

- Verify the newly deployed resources in the Portal

Creating the Azure AD Service Principal

The code reference for this next section is in the GitHub repo as ‘Deploy Service Principal and KeyVault Secrets.ps1’

To authenticate successfully using the Log Analytics REST API, an Azure AD Service Principal with scoped permissions should be created to allow communication via Azure Data Factory.

- First, set some variables to use in the following commands

##Set our variables $vaultName = $resourceRandomName+,"-kv" $servicePrincipalName = 'LogAnalyticsReaderService' $servicePrincipalScope = '/subscriptions/<subscriptionid>/resourceGroups/<resourcegroupname>/providers/Microsoft.OperationalInsights/workspaces/<workspacename>'

- Create Azure AD Service Principal

##Create Azure AD Service Principal $createServicePrincipal = New-AzADServicePrincipal -DisplayName $servicePrincipalName -Role 'Log Analytics Reader' -Scope $servicePrincipalScope

- Finally, save the client id and client secret in Key Vault

##Save service principal client id in KeyVault Set-AzKeyVaultSecret -VaultName $vaultName -Name 'log-analytics-reader-service-client-id' -SecretValue (ConvertTo-SecureString -AsPlainText ($createServicePrincipal.ApplicationId)) ##Save service principal secret in KeyVault Set-AzKeyVaultSecret -VaultName $vaultName -Name 'log-analytics-reader-service-secret' -SecretValue $createServicePrincipal.Secret

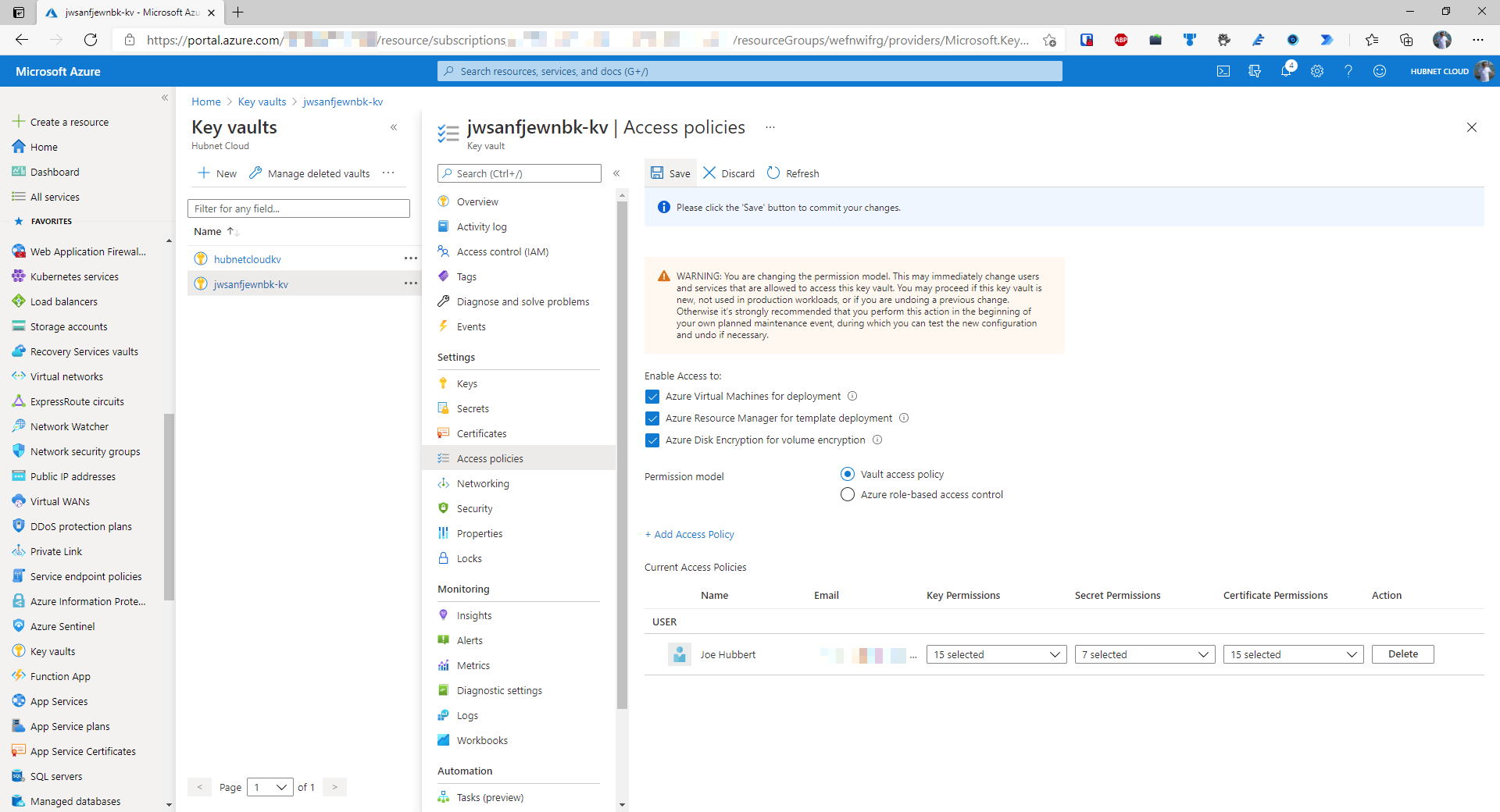

Setting the permissions for Azure Data Factory to read secrets from Azure Key Vault

- Navigate to the newly deployed Key Vault in the portal and go to the Access Policies blade. It will say that Azure role-based access control is turned on. That feature is currently in preview so would not be suitable for production deployment scenarios. Change the radio button to Vault access policy and click Save.

- Click on Add Access Policy

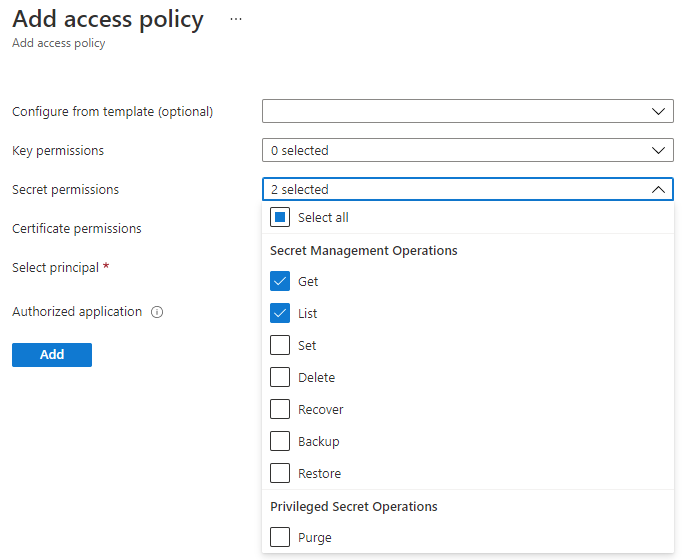

- Specify the scope of the permissions this policy, in this case, only Get and List secret permissions are needed

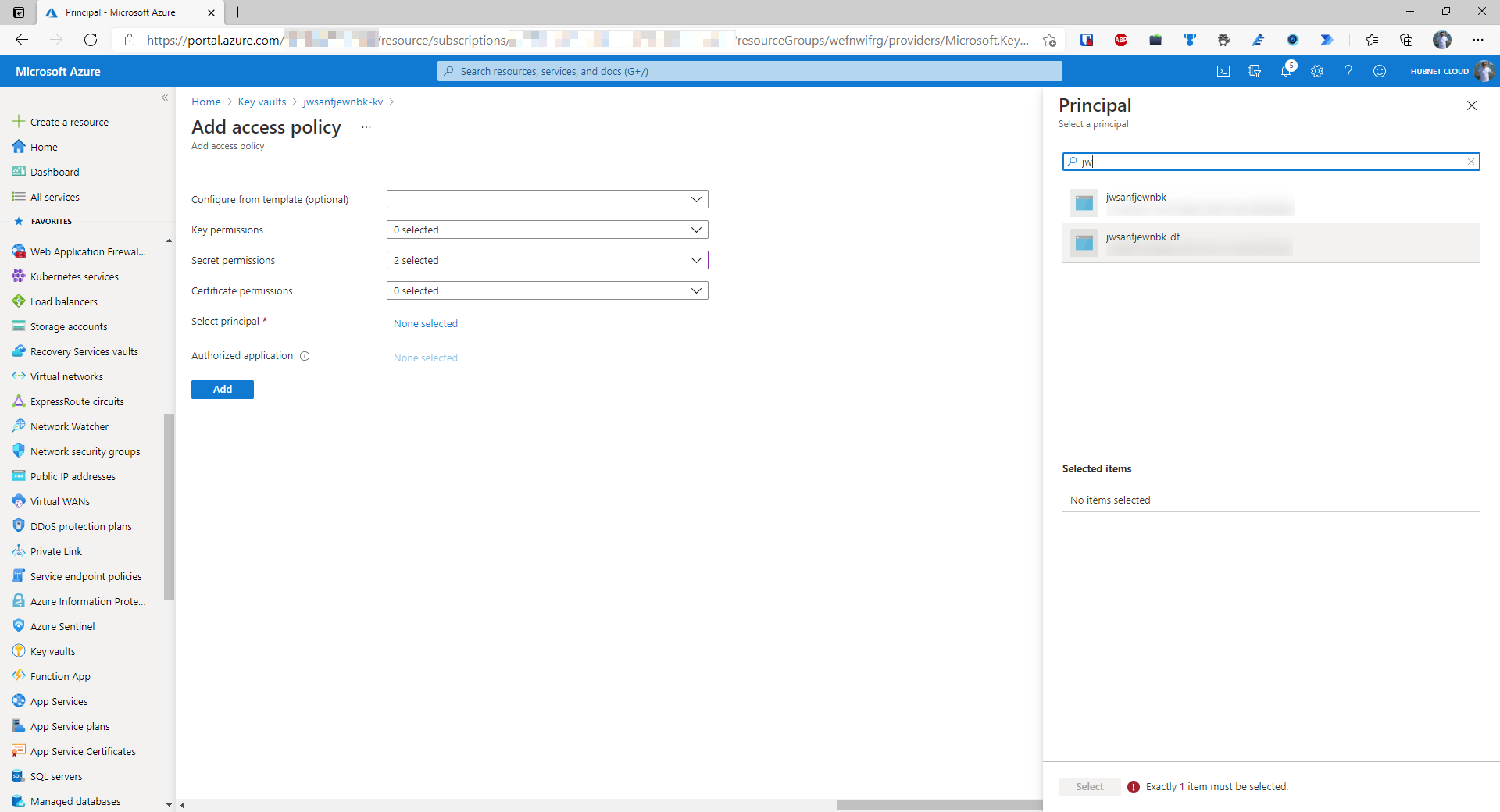

- Finally, this access policy should be scoped to a certain principal, in this case, the deployed Azure Data Factory. Search for the data factory and click Select. Ensure that the data factory is selected and not the resource group as the minimum level permissions should be applied

- Once the data factory is selected, click ‘Add’ and save the policy

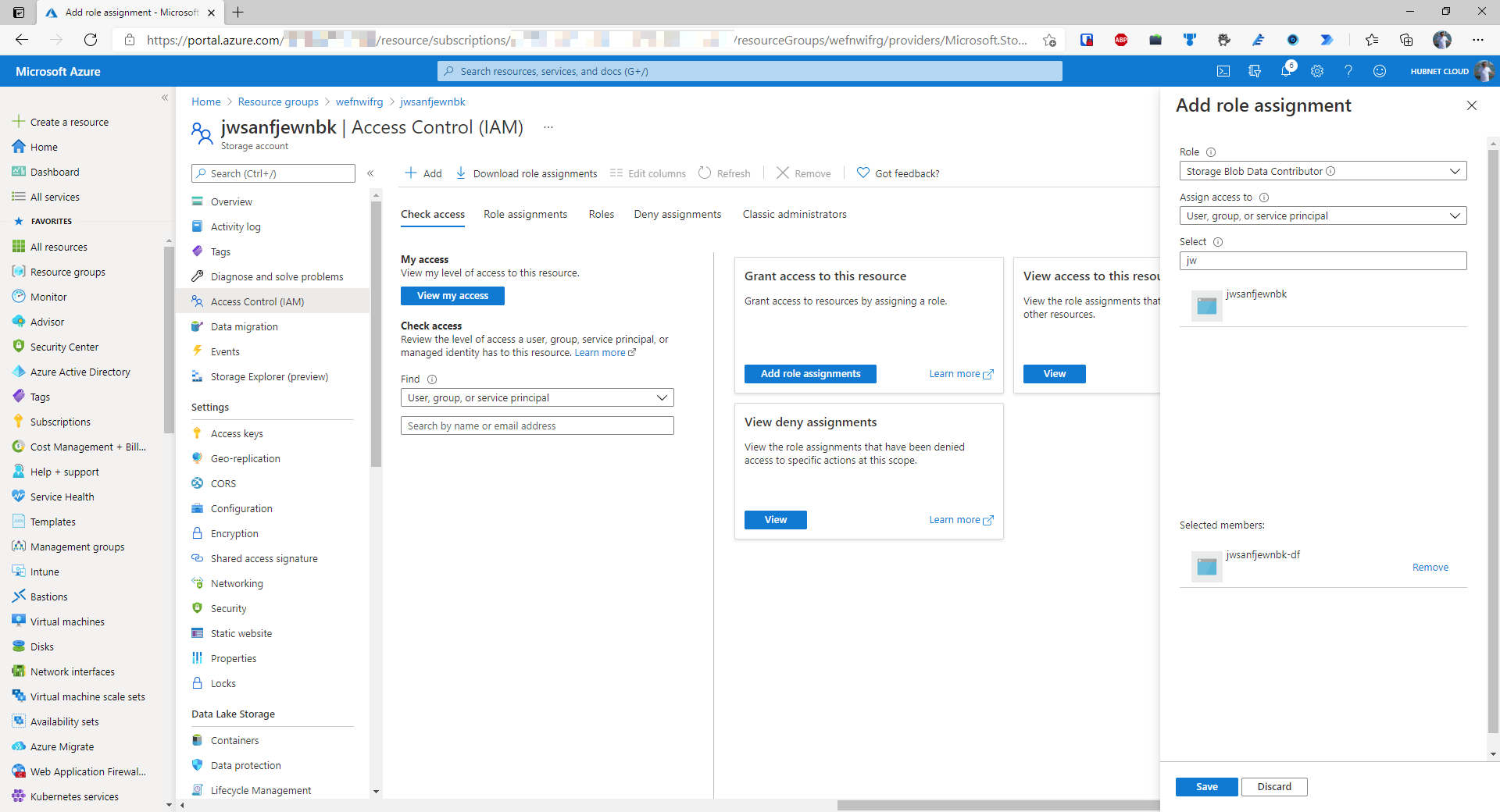

Setting permissions on the Azure Data Lake Gen 2 Storage for Azure Data Factory

- Navigate to the deployed data lake in the Azure Portal and go to the Access Control blade.

- Click Add

- Set the role to be Storage Blob Data Contributor

- In the search bar, find the deployed data factory and click Save

Conclusion

The initial core infrastructure required is set up and ready to go.

In part one, the following activities have been completed:

- Created resource group

- Deployed the following resources to resource group

- Azure Key Vault

- Azure Log Analytics workspace

- Azure Data Factory

- Azure Data Lake Storage Gen 2

- Created Azure AD Service Principal for Log Analytics Workspace

- Saved Azure AD Service Principal secrets in Azure Key Vault

- Set permissions for Azure Data Factory to access secrets in Azure Key Vault

- Set permissions for Azure Data Factory to read/write blobs in Azure Data Lake Storage Gen 2

Stay tuned for part 2 where we configure Azure Data Factory to extract the data from Log Analytics