In this next post in this series, we will take the data that has been transformed into a machine-readable form in Azure Databricks and store it in Azure SQL Database to be used later in reporting.

If you missed it, here is a link to Part 3.

All code used in this post is available in my GitHub here

Creating a logical Azure SQL server and database

You can ignore this step if not relevant but otherwise read on. We’ll need to create a logical Azure SQL server first to host our database and then the database afterwards.

- Open a PowerShell Core terminal in either Windows Terminal or a PowerShell Core terminal as an administrator/or with elevated permissions

- Install the Az PowerShell module. If this is already installed, then check for updates. Press A and Enter to trust the repository as it downloads and installs the latest modules. This may take a few minutes

##Run if Az PowerShell module is not already installed Install-Module Az -AllowClobber ##Run if Az PowerShell module is already installed but update check is required Update-Module Az

- Import the Az PowerShell module into the current session

Import-Module Az

- Authenticate to Azure as shown below. If the tenant or subscription is different to the default associated with your Azure AD identity, this can be specified in the same line of code with the -Tenant or -Subscription switches. To find out the Tenant GUID, open the Azure Portal ensure that you are signed into the correct tenant and go to Azure Active Directory. The Directory GUID should be displayed on the home blade. To find the Subscription GUID, switch to the correct tenant in the Azure Portal and type in Subscriptions in the search bar and press Enter. The GUIDs for all subscriptions that you have access to in that tenant will be displayed.

Connect-AzAccount

- Let’s set some variables set that we’ll reuse throughout the process

$resourceGroup = '<The same resource group that we have been using so far>' $serverName = $resourceGroup + '-sql' $databaseName = 'reportingDatabase' $location = '<The same location all the other resources have been deployed to e.g. uksouth>' ## currentUser is needed so we are able to login with our current user with Azure Active Directory authentication $currentUser = (Get-AzContext).Account $publicIpAddress = (Invoke-WebRequest -uri 'https://api.ipify.org/').Content ##We'll need to save the connection string of in our key vault for accessing the SQL database from Azure Data Factory $keyVault = '<The key vault we have been using>'

- Next, we’ll need to set the variable we’ll use for the administrator credentials

##You'll be prompted to enter a username and password that will be stored in this variable as a secure string $sqlAdminCredential = Get-Credential

- Now all the variables have been set, we can get started setting up the logical SQL server

New-AzSqlServer -ServerName $serverName -SqlAdministratorCredentials $sqlAdminCredential -Location $location -ServerVersion '12.0' -PublicNetworkAccess 'enabled' -MinimalTlsVersion '1.2' -ExternalAdminName $currentUser -ResourceGroupName $resourceGroup

- The server has now we been created, now we need to create a means for connecting to it in SQL Server Management Studio (SSMS) by implementing a server-level firewall rule using the public ip address variable we set earlier. If you use the public endpoint rather than private, run the second block of code to allow Azure Services to connect to your SQL server

New-AzSqlServerFirewallRule -FirewallRuleName 'AllowMyAccess' -StartIpAddress $publicIpAddress -EndIpAddress $publicIpAddress -ServerName $serverName -ResourceGroupName $resourceGroup ##Only run if using a public endpoint for your SQL server New-AzSqlServerFirewallRule -AllowAllAzureServices 'AllowMyAccess' -ServerName $serverName -ResourceGroupName $resourceGroup

- You should now be able to connect to your server in SSMS using your Azure Active Directory (AAD) credentials. The sqladmin credentials will not work in later steps as you will need to authenticate to AAD for setting up service principal access for ADF later

- Go back to your Windows Terminal and we’ll proceed with creating the database

##The bytesize shown is 2GB which at the time of writing is the smallest Azure SQL Database offering in the Basic series of the DTU pricing model New-AzSqlDatabase -DatabaseName $databaseName -ResourceGroupName $resourceGroup -ServerName $serverName -BackupStorageRedundancy 'Local' -Edition 'Basic' -LicenseType 'BasePrice' -MaxSizeBytes 2147483648

- If you go back to your SSMS object explorer and refresh, you should see newly created database. It should also show in the Azure Portal in your resource group

Assigning the correct permissions for the Data Factory managed identity on the SQL Server/Database

- Open a new query in the context of the master database and run below

--1. Create SQL Server login for the service princpal for the Azure Data Factory instance in the context of the master database CREATE LOGIN [<Name of our data factory>] FROM EXTERNAL PROVIDER

- Open a new query in the context of the reportingDatabase database

--2. Create database user for the login that was just created in the context of the reportingDatabase CREATE USER [<Name of our data factory>] FROM LOGIN [<Name of our data factory>] --3. Add database user to database reader and writer roles ALTER ROLE [db_datareader] ADD MEMBER [<Name of our data factory>] ALTER ROLE [db_datawriter] ADD MEMBER [<Name of our data factory>] --4. Create database role to execute stored procedures that we'll need to merge in the data on an ongoing basis to avoid data duplication CREATE ROLE [db_spexector] GRANT EXECUTE TO [db_spexector] ALTER ROLE [db_spexector] ADD MEMBER [<Name of our data factory>]

Storing the connection string in Azure Key Vault

- Go back to your PowerShell session

- Run the below command to save the connection string in your key vault

##Save the connection string to our SQL database in Azure Key Vault $connectionString = ConvertTo-SecureString -String ('Data Source=' + $serverName + '.database.windows.net;Initial Catalog=' + $databaseName + ';') -AsPlainText Set-AzKeyVaultSecret -VaultName $keyVault -Name 'sql-connection-string' -SecretValue $connectionString

Adding the Azure SQL Database as a linked service in Azure Data Factory

- Open your previously created Data Factory

- Click on the

icon

icon - Click on the Linked services blade

- Click on + New

- Choose Azure SQL Database

- Give your linked service a name such as LS_Reporting_SQL

- Change the connection type to be Azure Key Vault

- Select the key vault linked service we created earlier

- Choose the secret we just created in PowerShell

- Ensure the Authentication type is set to be Sql Authentication or Managed Identity

- Click Test connection to ensure Data Factory can connect to your database. If not, make sure that the relevant firewall rules are in place on the SQL server to allow access from Data Factory

- Click Create

Populating the database with dimension tables and creating staging/fact tables

- In the case of our fact dataset, there are some dimension table we would want to import to help slice the data, DimDate and DimHTTPCode. Here’s the code that I will use to create and populate these tables

--Run in the context of reportingDatabase --1. Create DimDate table CREATE TABLE [dbo].[DimDate] ( [Date_Value] DATE NOT NULL, [Day_Number_Month] TINYINT NOT NULL, [Day_Number_Week] TINYINT NOT NULL, [Week_Number_CY] TINYINT NOT NULL, [Month_Number_CY] TINYINT NOT NULL, [Quarter_Number_CY] TINYINT NOT NULL, [Day_Name_Long] NVARCHAR(9) NOT NULL, [Day_Name_Short] NCHAR(3) NOT NULL, [Month_Name_Long] NVARCHAR(9) NOT NULL, [Month_Name_Short] NCHAR(3) NOT NULL, [Quarter_Name_CY] NCHAR(2) NOT NULL, [Year_Quarter_Name_CY] NVARCHAR(7) NOT NULL, [IsWeekday] BIT NOT NULL, [IsWeekend] BIT NOT NULL, CONSTRAINT [PK_DimDate] PRIMARY KEY CLUSTERED ( [Date_Value] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF) ON [PRIMARY] ) ON [PRIMARY] GO --Populate DimDate DECLARE @StartDate AS DATE SET @StartDate = '2020-01-01' DECLARE @EndDate AS DATE SET @EndDate = '2025-12-31' DECLARE @LoopDate AS DATE SET @Loopdate = @StartDate --Sets Monday to be the first day of the week SET DATEFIRST 1 WHILE @Loopdate < @EndDate BEGIN INSERT INTO [dbo].[DimDate] VALUES ( -- [Date_Value] @LoopDate, --[Day_Number_Month] DATEPART(DAY, @LoopDate), -- [Day_Number_Week] DATEPART(WEEKDAY, @LoopDate), -- [Week_Number_CY] DATENAME(WEEK, @LoopDate), -- [Month_Number_CY] MONTH(@LoopDate), --[Quarter_Number_CY] DATEPART(QUARTER, @LoopDate), -- [Day_Name_Long] DATENAME(WEEKDAY, @LoopDate), --[Day_Name_Short] FORMAT(@LoopDate, 'ddd'), -- [Month_Name_Long] DATENAME(MONTH, @LoopDate), -- [Month_Name_Short] FORMAT(@LoopDate, 'MMM'), -- [Quarter_Name_CY] CONCAT ('Q',DATEPART(QUARTER, @LoopDate)), -- [Year_Quarter_Name_CY] CONCAT (YEAR(@LoopDate),'-Q',DATEPART(QUARTER, @LoopDate)), --[IsWeekday] CASE WHEN DATEPART(WEEKDAY, @LoopDate) IN (1,2,3,4,5) THEN 1 ELSE 0 END, -- [IsWeekend] CASE WHEN DATEPART(WEEKDAY, @LoopDate) IN (6,7) THEN 1 ELSE 0 END ) SET @Loopdate = DATEADD(DAY, 1, @LoopDate) END --Run in the context of reportingDatabase --1.Create DimHttpCode table CREATE TABLE [dbo].[DimHTTPCode] ( [Http_Code_Key] INT IDENTITY(1,1) NOT NULL, [Http_Error_Code] INT NOT NULL, [Http_Error_Code_Description] NVARCHAR(150) NOT NULL, CONSTRAINT [PK_DimHTTPCode] PRIMARY KEY CLUSTERED ( [Http_Code_Key] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, OPTIMIZE_FOR_SEQUENTIAL_KEY = OFF) ON [PRIMARY] ) ON [PRIMARY] --2.Populate DimHttpCode table INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('100','Continue') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('101','Switching Protocols') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('102','Processing') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('103','Early Hints') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('200','OK') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('201','Created') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('202','Accepted') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('203','Non-Authoritative Information') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('204','No Content') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('205','Reset Content') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('206','Partial Content') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('207','Multi-Status') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('208','Already Reported') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('226','IM Used') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('300','Multiple Choices') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('301','Moved Permanently') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('302','Found') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('303','See Other') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('304','Not Modified') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('305','Use Proxy') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('306','(Unused)') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('307','Temporary Redirect') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('308','Permanent Redirect') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('400','Bad Request') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('401','Unauthorized') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('402','Payment Required') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('403','Forbidden') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('404','Not Found') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('405','Method Not Allowed') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('406','Not Acceptable') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('407','Proxy Authentication Required') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('408','Request Timeout') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('409','Conflict') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('410','Gone') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('411','Length Required') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('412','Precondition Failed') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('413','Content Too Large') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('414','URI Too Long') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('415','Unsupported Media Type') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('416','Range Not Satisfiable') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('417','Expectation Failed') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('418','(Unused)') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('421','Misdirected Request') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('422','Unprocessable Content') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('423','Locked') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('424','Failed Dependency') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('425','Too Early') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('426','Upgrade Required') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('427','Unassigned') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('428','Precondition Required') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('429','Too Many Requests') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('430','Unassigned') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('431','Request Header Fields Too Large') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('451','Unavailable For Legal Reasons') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('500','Internal Server Error') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('501','Not Implemented') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('502','Bad Gateway') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('503','Service Unavailable') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('504','Gateway Timeout') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('505','HTTP Version Not Supported') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('506','Variant Also Negotiates') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('507','Insufficient Storage') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('508','Loop Detected') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('509','Unassigned') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('510','Not Extended (OBSOLETED)') INSERT INTO [dbo].[DimHTTPCode] ([Http_Error_Code], [Http_Error_Code_Description]) VALUES ('511','Network Authentication Required') - Let’s create the staging and fact tables

--Run in the context of reportingDatabase CREATE TABLE [dbo].[staging_factHubnetCloudWebsiteStats]( [timeGenerated] DATETIME2 NULL, [userAction] NVARCHAR(500) NULL, [appUrl] NVARCHAR(500) NULL, [successFlag] BIT NULL, [httpResultCode] INT NULL, [durationOfRequestMs] FLOAT NULL, [clientType] NVARCHAR(50) NULL, [clientOS] NVARCHAR(50) NULL, [clientCity] NVARCHAR(50) NULL, [clientStateOrProvince] NVARCHAR(50) NULL, [clientCountryOrRegion] NVARCHAR(50) NULL, [clientBrowser] NVARCHAR(50) NULL, [appRoleName] NVARCHAR(50) NULL, [requestDate] DATE NULL, [requestHour] TINYINT NULL, [adfPipelineRunId] NVARCHAR(50) NOT NULL, [adfCopyTimestamp] DATETIME2 NOT NULL ) ALTER TABLE [dbo].[staging_factHubnetCloudWebsiteStats] ADD DEFAULT GETUTCDATE() FOR [adfCopyTimestamp] --Run in the context of reportingDatabase CREATE TABLE [dbo].[FactHubnetCloudWebsiteStats]( [Request_Timestamp_UTC] DATETIME2 NULL, [Request_Date_UTC] DATE NULL, [Request_Hour_UTC] TINYINT NULL, [Request_User_Action] NVARCHAR(500) NULL, [Request_App_URL] NVARCHAR(500) NULL, [Request_Success_Flag] BIT NULL, [Request_HTTP_Code] INT NULL, [Request_Duration_Milliseconds] FLOAT NULL, [Request_Client_Type] NVARCHAR(50) NULL, [Request_Client_OS] NVARCHAR(50) NULL, [Request_Client_Browser] NVARCHAR(50) NULL, [Request_Client_City] NVARCHAR(50) NULL, [Request_Client_State_Or_Province] NVARCHAR(50) NULL, [Request_Client_Country_Or_Region] NVARCHAR(50) NULL, [Request_App_Role_Name] NVARCHAR(50) ) GO ALTER TABLE [dbo].[FactHubnetCloudWebsiteStats] ADD CONSTRAINT [FK_FactHubnetCloudWebsiteStats_DimDate] FOREIGN KEY([Request_Date_UTC]) REFERENCES [dbo].[DimDate] ([Date_Value]) GO ALTER TABLE [dbo].[FactHubnetCloudWebsiteStats] ADD CONSTRAINT [FK_FactHubnetCloudWebsiteStats_DimHTTPCode] FOREIGN KEY([Request_HTTP_Code]) REFERENCES [dbo].[DimHTTPCode] ([Http_Code_Key]) GO - If we were storing the data in a Kimball data warehouse we would want to ensure that the schema conforms to a star schema. We have partially followed that by adding the date and HTTP code dimensions by implementing foreign key relationships. If we were going to completely follow the Kimball methodology we would want to create dimensions for other datapoints like the User Action Type, Client OS, Client Type, Client City/State/Country. This is so we can optimise our data storage as much as possible. For example, it takes much less space in the fact table to store integers for a foreign key rather than a string value. Conversely, SQL Server also performs much better when joining on integer values when compared to string. We also would not allow nullable values in the final fact tables

Creating the stored procedure to merge staging data into the fact table

In ADF, we simply could just have a copy data task that would transfer the cleaned data produced by Databricks straight into the fact table. However, there is a large risk of data duplication depending on how often your ADF pipelines are executed and which time period has been specified in your Log Analytics function. In this case, we are going to create a stored procedure that will select any newly copied records to the staging table in the current pipeline run. It will then join that dataset to relevant tables/columns (in this case just DimHTTPCode) to obtain the foreign/surrogate key value to be used in the fact table. We will also rename columns so they match our final table schema to make for cleaner code

--Run in the context of reportingDatabase

CREATE PROCEDURE [dbo].[sp_MergeWebsiteStatsToLive]

@adfPipelineRunId NVARCHAR(36)

AS

--Selects all of our newly added records into a temp table and join to the correct records in the dimension tables where required.

--In this case, it's only DimHTTPCode where we want to return a value from as we are pulling the primary/surrogate key column from that table instead

--of writing the HTTP Code value explicitly each time. We are not doing any joins to DimDate as it is a 1-1 join with the same value on each

--side of the relationship. We will also rename columns in the temp table so they match the schema of the final fact table

SELECT

STG.[timeGenerated] AS [Request_Timestamp_UTC],

STG.[requestDate] AS [Request_Date_UTC],

STG.[requestHour] AS [Request_Hour_UTC],

STG.[userAction] AS [Request_User_Action],

STG.[appUrl] AS [Request_App_URL],

STG.[successFlag] AS [Request_Success_Flag],

DHC.[Http_Code_Key] AS [Request_HTTP_Code],

STG.[durationOfRequestMs] AS [Request_Duration_Milliseconds],

STG.[clientType] AS [Request_Client_Type],

STG.[clientOS] AS [Request_Client_OS],

STG.[clientBrowser] AS [Request_Client_Browser],

STG.[clientCity] AS [Request_Client_City],

STG.[clientStateOrProvince] AS [Request_Client_State_Or_Province],

STG.[clientCountryOrRegion] AS [Request_Client_Country_Or_Region],

STG.[appRoleName] AS [Request_App_Role_Name]

INTO #WebsiteStatsNewRecords

FROM [dbo].[staging_factHubnetCloudWebsiteStats] STG

LEFT JOIN [dbo].[DimHTTPCode] DHC ON STG.[httpResultCode] = DHC.[Http_Error_Code]

WHERE STG.[adfPipelineRunId] = @adfPipelineRunId

--Now we're going to merge in any records that do not already exist in the fact table so we avoid data duplication

MERGE INTO [dbo].[FactHubnetCloudWebsiteStats] DST

USING #WebsiteStatsNewRecords SRC

ON DST.[Request_Timestamp_UTC] = SRC.[Request_Timestamp_UTC]

AND DST.[Request_Date_UTC] = SRC.[Request_Date_UTC]

AND DST.[Request_Hour_UTC] = SRC.[Request_Hour_UTC]

AND DST.[Request_User_Action] = SRC.[Request_User_Action]

AND DST.[Request_App_URL] = SRC.[Request_App_URL]

AND DST.[Request_Success_Flag] = SRC.[Request_Success_Flag]

AND DST.[Request_HTTP_Code] = SRC.[Request_HTTP_Code]

AND DST.[Request_Duration_Milliseconds] = SRC.[Request_Duration_Milliseconds]

AND DST.[Request_Client_Type] = SRC.[Request_Client_Type]

AND DST.[Request_Client_OS] = SRC.[Request_Client_OS]

AND DST.[Request_Client_Browser] = SRC.[Request_Client_Browser]

AND DST.[Request_Client_City] = SRC.[Request_Client_City]

AND DST.[Request_Client_State_Or_Province] = SRC.[Request_Client_State_Or_Province]

AND DST.[Request_Client_Country_Or_Region] = SRC.[Request_Client_Country_Or_Region]

AND DST.[Request_App_Role_Name] = SRC.[Request_App_Role_Name]

WHEN NOT MATCHED BY TARGET THEN INSERT

(

[Request_Timestamp_UTC],

[Request_Date_UTC],

[Request_Hour_UTC],

[Request_User_Action],

[Request_App_URL],

[Request_Success_Flag],

[Request_HTTP_Code],

[Request_Duration_Milliseconds],

[Request_Client_Type],

[Request_Client_OS],

[Request_Client_Browser],

[Request_Client_City],

[Request_Client_State_Or_Province],

[Request_Client_Country_Or_Region],

[Request_App_Role_Name]

)

VALUES

(

SRC.[Request_Timestamp_UTC],

SRC.[Request_Date_UTC],

SRC.[Request_Hour_UTC],

SRC.[Request_User_Action],

SRC.[Request_App_URL],

SRC.[Request_Success_Flag],

SRC.[Request_HTTP_Code],

SRC.[Request_Duration_Milliseconds],

SRC.[Request_Client_Type],

SRC.[Request_Client_OS],

SRC.[Request_Client_Browser],

SRC.[Request_Client_City],

SRC.[Request_Client_State_Or_Province],

SRC.[Request_Client_Country_Or_Region],

SRC.[Request_App_Role_Name]

);

--Some logic to control the size of your staging table by only keeping limited history, in this case, 1 week

DELETE FROM [dbo].[staging_factHubnetCloudWebsiteStats]

WHERE [adfCopyTimestamp] < DATEADD(WEEK,-1,GETUTCDATE())

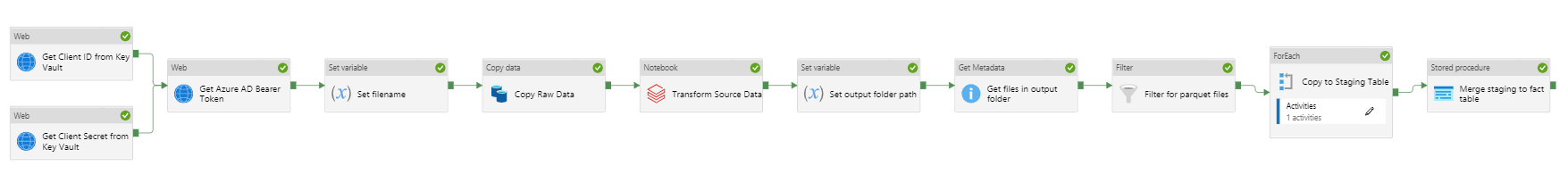

Implementing the final copy and merge logic into your ADF pipeline

We’re almost ready to implement the logic into our ADF pipeline, this is the final stretch if you will, of this part of the process. First, we need to create some new datasets

- Open up the authoring mode in ADF Studio

- Go to Datasets

- Click the plus to add new

- Select Azure Data Lake Storage Gen 2 as the data store and click Continue

- Set the format to be Parquet (We are using Parquet as it is much more efficient than JSON/CSV at compressing datasets)

- Name your dataset DS_Datalake_Parquet

- Click OK

- Now we’re going to parametrise the file path so it can be used by multiple pipelines

- Go to the Parameters tab

- Create a new string parameter called container

- Create another string parameter called folderPath

- Create another string parameter called fileName

- Go back to the Connection tab and assign the container parameter to the File system field with the following dynamic content code

@dataset().container

- Assign the folderPath parameter to the Directory field using the following dynamic content code

@dataset().folderPath

- Assign the fileName parameter to the File field using the following dynamic content code

@dataset().fileName

- Create another parquet dataset, same instructions as before called DS_Datalake_Parquet_Wildcard

- Go to the Parameters tab

- Create a new string parameter called container

- Create another string parameter called folderPath

- Go back to the Connection tab and assign the container parameter to the File system field with the following dynamic content code

@dataset().container

- Assign the folderPath parameter to the Directory field using the following dynamic content code

@dataset().folderPath

- Create another dataset

- Select Azure SQL Database as the data store click Continue

- Set the name to be DS_Azure_SQL_Staging_Website_Stats

- Set the table name to be your staging table that you created earlier

- Open up your pipeline in ADF

- We need to set a new pipeline variable called outputFolderPath

- We can set this in the Variables tab at the bottom of the authoring window as before

- Set the type to be String

- Add a new Set variable activity and have it run after successful execution of your Databricks notebook activity. Name it Set output folder path in the General tab

- The value we want to give will be an expression, populate using the Add Dynamic Content link that appears when you click into the value text field

-

@activity('Transform Source Data').output.runOutput

-

- Afterwards, we need to get the list of files in the folder specified in the variable that we just declared the value for. To get this, drag a Get Metadata activity into your pipeline and have it as a successor to Set output folder path

- Call this activity Get files in output folder in the General tab

- In the Settings tab, set the Dataset to be DS_Datalke_Parquet_Wildcard

- For the container parameter we will pass in the text value ‘loganalytics’ as this is the name of our container in the datalake

- For the folderPath parameter we will pass in the following dynamic content value

@variables('outputFolderPath') - Under Field list, click New

- Choose Child items

- Next we need to add a Filter activity to our pipeline as a successor to Get files in output folder so we only show parquet files. This should be under the Iteration & conditionals header

- Name this activity Filter for parquet files

- In the Items field, assign the following dynamic content value

@activity('Get files in output folder').Output.childItems - In the Condition field, assign the following dynamic content value

@endswith(item().name,'snappy.parquet')

- Sometimes the datasets produced by Databricks are so large that multiple parquet files are produced. To account for this, we need to add a ForEach activity from under the Iteration & conditionals header rather than a copy data activity. This should be a successor to the Filter for parquet files activity

- Name this ForEach activity Copy to Staging Table in the General tab

- Go the Settings tab and tick the Sequential check box

- In the Items field enter the following dynamic content

@activity('Filter for parquet files').output.Value - Go to the Activities tab and click on the pencil icon

- Drag a Copy data activity from the Move & transform header into the canvas

- Name this activity Copy to Azure SQL in the General tab

- In the Source tab, set the Source dataset to be DS_Datalake_Parquet

- For the container parameter set this to be the static string ‘loganalytics’

- For the folderPath parameter set this to have the following dynamic content

@variables('outputFolderPath') - For the fileName parameter set this to have the following dynamic content

@item().name

- Go to the Sink tab and set this to be DS_Azure_SQL_Staging_Website_Stats

- Go the Mapping tab

- Click on Import schemas (This is assuming you ran a Debug on the pipeline after implementing Databricks. If not, please debug your pipeline by placing a break point on the Databricks activity and then debug the pipeline. Remove once completed)

- Open Azure Storage Explorer, either in your browser or the desktop application

- Locate the parquet file from the output of the Databricks run and copy its path

- Paste the folder path into the @variables(‘outputFolderPath’) field ensuring that you leave a trailing /

- Paste the filename into the @item().name field and click OK

- All the columns should be mapped apart from the adfPipelineRunId and adfCopyTimestamp columns

- Ensure the mapping for adfPipelineRunId is set to the pipelineRunId column

- Remove the mapping for adfCopyTimestamp as that column has a default value already

- Go back to your main pipeline

- Add a Stored procedure activity from the General header as a successor to the Copy to Staging Table ForEach loop

- Name this activity Merge staging to fact table in the General tab

- In the Settings tab, set the Linked Service field to be DS_Azure_SQL

- Set the stored procedure to be the one we created earlier, in this case sp_MergeWebsiteStatsToLive

- Add a new stored procedure parameter called adfPipelineRunId or whatever you specified in your stored procedure creation of the type String

- Add the following dynamic content as the value

@pipeline().RunId

- Click Publish at the top of the authoring window for your changes to save.

- Click on Debug

Summary

There we have it, we now have copied our data into the Azure SQL database using ADF ready for reporting.

With that, that concludes this series on extracting data from Log Analytics